The world has entered the third decade of the 21st century, which can be called ‘the initial era of Artificial Intelligence (AI)’. Technology has made significant strides. A decade ago, 8 GB of storage seemed enough for a phone; today, many people find even 128 GB too little. This rapid growth in data has pushed data centres into large, multi-storey buildings that require robust infrastructure. Keeping social media, business, email and AI online requires physical buildings to store big data, and those buildings consume a great deal of energy. Without energy, data would not flow through the Internet the way it does: you would not be able to upload your wedding videos to Instagram, and people who use AI to get answers or to create posters, paragraphs and project outlines would be unable to do anything.

Just imagine the amount of energy used to keep this whole infrastructure alive. A phone used continuously for a few hours heats up; now imagine how much heat a data centre emits, and how much energy and infrastructure are needed to keep it cool. This article discusses recent experiments aimed at reducing the energy needed to prevent data centres from overheating. It also highlights the challenges, feasibility and repercussions of such experiments.

In the ever-evolving world of digital technology, Microsoft’s Project ‘Natick’ has emerged as a groundbreaking experiment that takes computing to new depths. Imagine storing the world’s digital information in sealed containers beneath the ocean’s surface. It sounds like science fiction, but it is becoming a fascinating reality. The concept is simple yet revolutionary: place data centres underwater, where the surrounding sea cools them, to solve some of the most pressing challenges in our digital infrastructure.

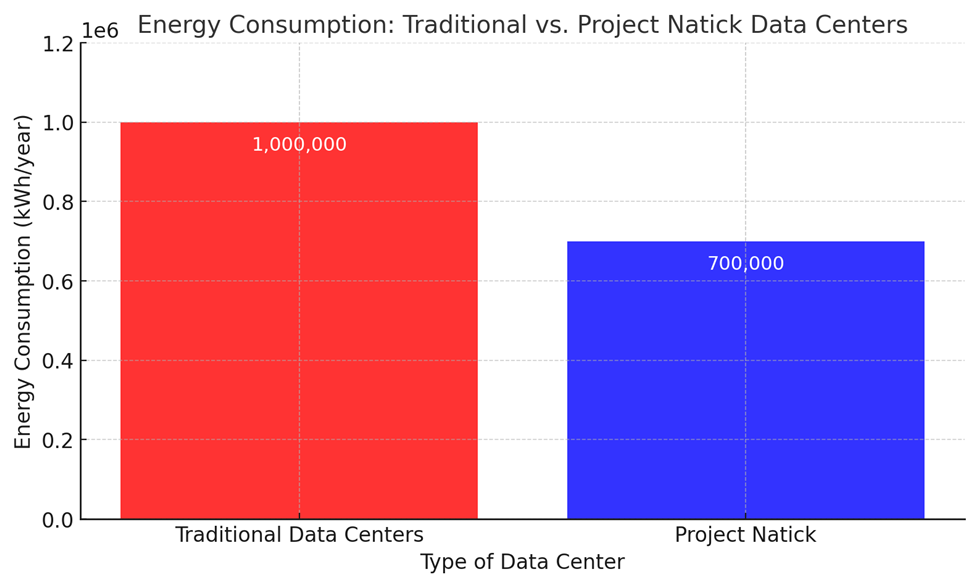

The bar chart compares the energy consumption of traditional data centres versus Project Natick.

Reduced Cooling Costs

At its core, this underwater approach offers some notable benefits. Traditional data centres are energy-hungry, consuming massive amounts of electricity just to keep their servers cool. By submerging servers in cold seawater, companies can dramatically reduce cooling costs. Microsoft’s experiment off the Scottish coast also showed remarkable reliability: only eight of 864 servers failed after two years, which Microsoft reported as roughly one-eighth of the failure rate of a comparable land-based deployment. It’s like finding a natural air conditioning system that works for free!
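To put the reliability figure in proportion, the minimal Python sketch below computes the failure rate implied by eight failures out of 864 servers over two years. The function and variable names are illustrative assumptions, and the per-year value is a simple average of the two-year figure, not a measured annual rate.

```python
def failure_rate(failed: int, total: int) -> float:
    """Return the fraction of servers that failed during the observation period."""
    if total <= 0:
        raise ValueError("total must be positive")
    return failed / total


# Figures quoted in the article for the Project Natick deployment.
NATICK_FAILED = 8
NATICK_TOTAL = 864
YEARS_DEPLOYED = 2

natick_rate = failure_rate(NATICK_FAILED, NATICK_TOTAL)

# Assumption: spread the failures evenly over the deployment (no compounding).
natick_rate_per_year = natick_rate / YEARS_DEPLOYED

print(f"Failure rate over deployment: {natick_rate:.2%}")        # 0.93%
print(f"Average failure rate per year: {natick_rate_per_year:.2%}")  # 0.46%
```

In other words, fewer than one server in a hundred failed over the whole deployment.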

Construction Efficiency

The construction of these underwater data centres is surprisingly efficient. While a typical land-based data centre might take one to two years to build, these underwater pods can be ready in just 90 days. They’re essentially giant, sealed containers lowered onto the seabed and designed to withstand extreme conditions. Siting them near the coast offers another significant advantage: easy access to renewable energy sources like wind, solar, and tidal power. It’s like placing a power plant and a computer centre in one strategic location.

Future Benefits

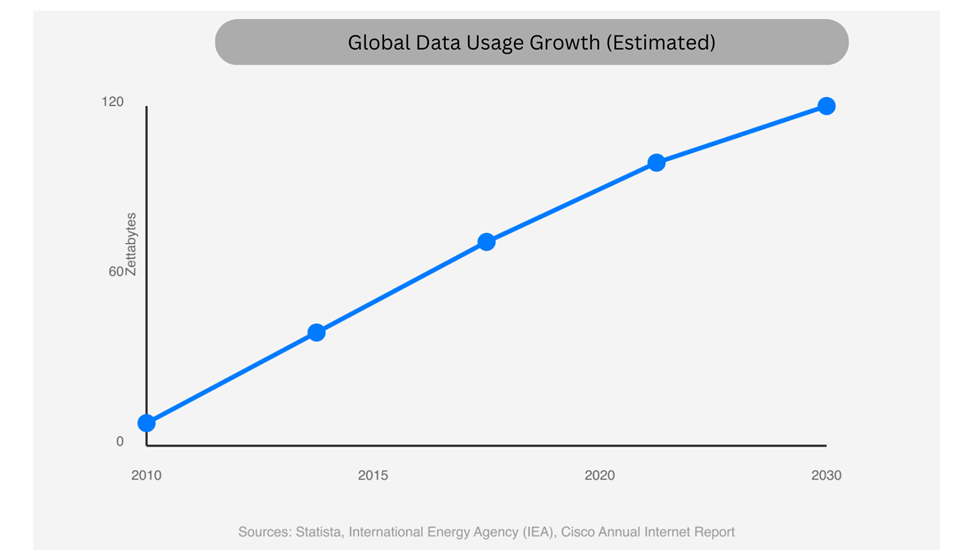

The global implications are fascinating. As artificial intelligence, cryptocurrency, and large language models drive an insatiable appetite for data, our energy consumption is skyrocketing. The International Energy Agency predicts data centres could demand an additional 590 terawatt-hours of electricity by 2026—equivalent to adding another entire country’s energy consumption to the grid. Underwater data centres represent more than just a cool technological experiment; they’re a potential solution to our growing digital energy crisis.
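To give a sense of scale, the short Python sketch below converts 590 terawatt-hours into an average continuous power draw. It assumes the figure refers to energy demanded over one year; the function name and the use of a 365-day year are illustrative choices, not taken from the IEA.

```python
HOURS_PER_YEAR = 365 * 24  # 8760 hours, ignoring leap years


def twh_per_year_to_average_gw(twh_per_year: float) -> float:
    """Convert annual energy in TWh to average continuous power in GW."""
    gwh_per_year = twh_per_year * 1000  # 1 TWh = 1000 GWh
    return gwh_per_year / HOURS_PER_YEAR


# Assumption: the 590 TWh quoted in the article is an annual figure.
extra_demand_twh = 590
average_gw = twh_per_year_to_average_gw(extra_demand_twh)

print(f"Average additional load: {average_gw:.1f} GW")  # 67.4 GW
```

Under that assumption, the extra demand corresponds to roughly 67 GW of generation running around the clock.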

Maintenance and Accessibility

However, it’s not all smooth sailing. The challenges are as deep as the ocean itself. Maintaining these underwater facilities requires advanced robotic technologies, as human access is extremely limited. Security is a complex puzzle: underwater environments leave these data centres vulnerable to physical attacks, and because sound travels efficiently through dense water, acoustic attacks that disrupt the equipment could be easier to mount. Tropical regions face an additional hurdle, as their warmer waters don’t provide the same natural cooling benefits as colder seas.

Environmental Factors

Environmental considerations add another layer of complexity. While the concept promises reduced energy consumption and minimal ecological disruption, there are concerns about long-term impacts. The potential for biofouling—where marine organisms like barnacles might attach to the equipment—could affect heat transfer and overall performance. Moreover, marine heat waves could pose unexpected challenges to these underwater technological havens.

Geographical Limitations for Some Regions

For countries like India and other tropical nations, the underwater data centre isn’t a one-size-fits-all solution but an invitation to innovate. It requires local ingenuity, strategic investments in research, and a willingness to experiment with hybrid technologies. Underwater data centres in inland lakes or customized cooling methods could be the next frontier of sustainable computing.

As we stand on the brink of this technological revolution, underwater data centres symbolize more than just innovation—they represent human creativity in solving complex challenges. They remind us that the solutions to our most pressing technological problems might lie in unexpected places, sometimes quite literally beneath the surface. The future of digital infrastructure is not just about processing power but about finding smarter, more sustainable ways to support our increasingly connected world.

Through the Geopolitical Prism

The deployment of underwater data centres relies on access to coastal areas with suitable environmental conditions, such as cold water for natural cooling and proximity to renewable energy sources. Countries with extensive coastlines, such as Norway, the United States, or Australia, gain a natural advantage. These countries may emerge as underwater data infrastructure hubs and strengthen their global positions as technology leaders. By contrast, landlocked or tropical countries may find it difficult to adopt this technology without significant innovation, such as using inland water bodies or alternative cooling methods. Such a disparity could create a “technology divide” that mirrors existing differences in digital infrastructure.

The second geopolitical issue is digital sovereignty. Concerns that sensitive information could be accessed may discourage a country from storing important data in facilities situated in or near another nation’s territorial waters. Data infrastructure would also be a prime target for attack in the event of war. This could spark competition over where these data centres are built, with countries vying to control infrastructure to safeguard their digital autonomy.

Installing data centres in international waters, beyond the jurisdiction of any single country, would complicate governance further. Questions about oversight, regulatory frameworks, and jurisdictional disputes could emerge, particularly if the data centres serve multiple nations or house sensitive data.